Analysis of the Impact of MiniMax's Open-Sourced OctoCodingBench on the AI Programming Competitive Landscape


About us: Ginlix AI is the AI Investment Copilot powered by real data, bridging advanced AI with professional financial databases to provide verifiable, truth-based answers. Please use the chat box below to ask any financial question.

This report analyzes the impact of MiniMax’s open-sourced OctoCodingBench evaluation benchmark on the competitive landscape of the AI programming sector.

On January 14, 2026, MiniMax officially open-sourced OctoCodingBench, a benchmark designed specifically for evaluating coding agents [1]. Results on this benchmark reveal several key findings:

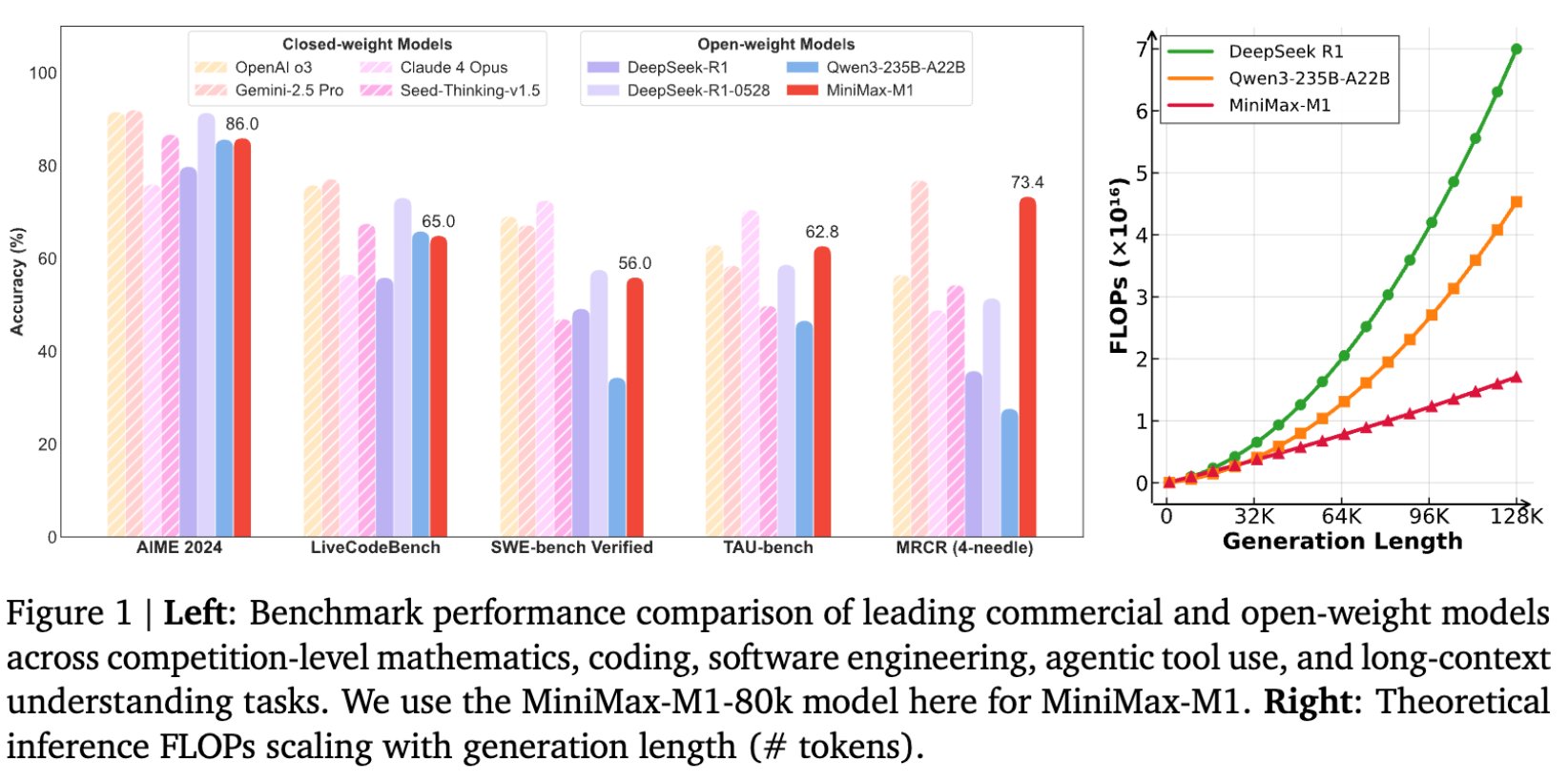

According to the MiniMax M2.1 release announcement, the model surpasses Claude Sonnet 4.5 and Gemini 3 Pro in multilingual programming scenarios and approaches the level of Claude Opus 4.5 [2]. M2.1 also improves on M2 across specific scenarios, including test case generation, code performance optimization, code review (SWE-Review), and instruction compliance (OctoCodingBench) [2].

As an open, shared benchmark, OctoCodingBench can shape the industry in three ways:

- Reducing Evaluation Bias: A unified evaluation standard makes performance comparisons between models more objective

- Promoting Technological Iteration: Clear performance indicators help developers identify model shortcomings and guide technical optimization directions

- Accelerating Industry Consensus: A shared standard helps the industry converge on a common definition of an “excellent AI programming model”

According to the evaluation results, open-source models are rapidly catching up with closed-source models [1]. This trend has three implications:

- Narrowing Technological Gap: The collaborative model of the open-source community is accelerating model iteration

- Lowering Competition Threshold: The open-sourcing of a high-quality evaluation benchmark lowers the entry barrier for latecomers

- Accelerating Innovation: Standardized evaluations promote rapid technological iteration and optimization

Competition in the AI programming market currently shows two distinct characteristics:

- Saturated Consumer-Side (C-End) Market: Products such as Cursor have gained a first-mover advantage, with Cursor’s Annual Recurring Revenue (ARR) reportedly rising from $1 million in 2023 to $65 million in November 2024 [3]

- Large Potential in the Enterprise-Side (B-End) Market: Enterprise demand is growing rapidly, and startups such as Ciyuan Wuxian have scored 79.4% on SWE-bench Verified, setting a new state-of-the-art (SOTA) record [3]

OctoCodingBench’s finding that process compliance remains a blind spot is especially significant for the enterprise market:

- Compliance Requirements: Industries such as finance and healthcare have strict compliance requirements for code generation processes

- Legacy System Adaptation: Enterprises’ complex legacy systems require more intelligent solutions

- Quality Assurance Mechanisms: Enterprises need more robust code quality assurance systems

On the technical side, competition is likely to center on the following capabilities:

- Instruction-Following Stability: Models must avoid the degradation of instruction-following ability over long-horizon tasks

- Full-Stack Construction Capability: MiniMax also open-sourced the VIBE evaluation benchmark, which covers five core subsets: Web, simulation, Android, iOS, and back-end [2]

- Agent Generalization: Stable performance across different frameworks has become a key indicator
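One way to quantify the long-horizon degradation named in the first item is to compare instruction-following rates across successive windows of a single task. The Python sketch below is illustrative only and is not OctoCodingBench’s scoring method: it assumes per-step compliance judgments are already available as booleans (for example, from a human or model grader), and the function names, window size, and sample data are hypothetical.

```python
def windowed_compliance(flags: list[bool], window: int) -> list[float]:
    """Share of compliant steps in each consecutive window (the last may be shorter)."""
    if window <= 0:
        raise ValueError("window must be positive")
    rates = []
    for start in range(0, len(flags), window):
        chunk = flags[start:start + window]
        rates.append(sum(chunk) / len(chunk))
    return rates


def degradation(flags: list[bool], window: int) -> float:
    """Drop in compliance from the first window to the last (positive = worse)."""
    rates = windowed_compliance(flags, window)
    if not rates:
        return 0.0  # no steps, so nothing to compare
    return rates[0] - rates[-1]


# Hypothetical per-step judgments for a 16-step task: True = instruction followed.
flags = [True] * 8 + [True, False, True, False, False, True, False, False]

print(windowed_compliance(flags, window=8))  # [1.0, 0.375]
print(degradation(flags, window=8))          # 0.625
```

A fixed window keeps the comparison simple; a real evaluation would more likely weight steps by how many instructions were in force at each point.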

The move from traditional benchmark testing to the “Agent-as-a-Verifier (AaaV)” paradigm [2] means that evaluation criteria are changing from result-oriented to process-oriented: an agent is judged not only on whether its final output is correct but also on how it got there.
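To make the distinction between result-oriented and process-oriented scoring concrete, here is a minimal Python sketch. It is not the actual method used by OctoCodingBench or AaaV. It assumes a hypothetical trajectory format (`Step`) and hypothetical user rules expressed as predicates; all names and data are illustrative. In the example, the agent’s run passes its tests and so scores perfectly on outcome, but it is penalized on process for editing a file it was told not to touch.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical record of one action in an agent's trajectory.
@dataclass
class Step:
    tool: str    # action type, e.g. "edit_file" or "run_tests"
    target: str  # file path or command the action applied to


# Hypothetical constraint format: (name, predicate that must hold for every step).
Constraint = tuple[str, Callable[[Step], bool]]


def outcome_score(tests_passed: bool) -> float:
    """Result-oriented scoring: only the final outcome counts."""
    return 1.0 if tests_passed else 0.0


def process_score(trajectory: list[Step], constraints: list[Constraint]) -> float:
    """Process-oriented scoring: fraction of (step, constraint) checks that hold."""
    checks = [holds(step) for step in trajectory for _, holds in constraints]
    return sum(checks) / len(checks) if checks else 1.0


def violations(trajectory: list[Step], constraints: list[Constraint]) -> list[tuple[int, str]]:
    """List (step index, constraint name) for every broken rule."""
    return [
        (i, name)
        for i, step in enumerate(trajectory)
        for name, holds in constraints
        if not holds(step)
    ]


# Example rules a user might state in a task prompt (illustrative only).
RULES: list[Constraint] = [
    ("no_test_edits", lambda s: not (s.tool == "edit_file" and s.target.startswith("tests/"))),
    ("no_push", lambda s: s.tool != "git_push"),
]

# The agent fixes the bug but also edits a test file it was told to leave alone.
trajectory = [
    Step("edit_file", "src/app.py"),
    Step("edit_file", "tests/test_app.py"),
    Step("run_tests", "pytest"),
]

print(outcome_score(tests_passed=True))            # 1.0
print(round(process_score(trajectory, RULES), 3))  # 0.833
print(violations(trajectory, RULES))               # [(1, 'no_test_edits')]
```

Reporting the violation list alongside the score matters for enterprise use. It shows *which* rule was broken, which is what compliance reviews typically need.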

At the industry level, competitive strategies are evolving in several directions:

- Horizontal Expansion: Extending from programming tools to hardware (AI glasses, AI phones, etc.) [5]

- Vertical Deepening: Moving from general-purpose tools to enterprise-level solutions

- Ecosystem Construction: Platform and ecosystem building have become mainstream trends

- Tool + Platform Model: Meeting enterprise needs through standardized integration and personalized adaptation [3]

- Value-Oriented: Shifting from focusing on technical indicators to measuring actual business value [3]

MiniMax’s open-sourcing of the OctoCodingBench evaluation benchmark affects the competitive landscape of the AI programming sector in several ways:

- Standardization Impact: Establishes evaluation criteria more closely aligned with real-world development scenarios

- Competition Catalyst: Accelerates competition between open-source and closed-source models as the technological gap continues to narrow

- Market Guidance: Reveals gaps in production-grade applications, steering the industry toward enterprise-level scenarios

- Chinese Influence: Strengthens China’s position in global AI programming competition

Recommendations for AI programming model developers:

- Focus on improving instruction-following stability in long-horizon tasks

- Strengthen process compliance capabilities

- Improve adaptability across programming languages and application scenarios

Recommendations for enterprise users:

- Check how well evaluation metrics match actual needs

- Evaluate the enterprise-level service capabilities of suppliers

- Establish an AI programming adoption strategy suited to your own needs

Recommendations for investors:

- Focus on AI programming companies with enterprise-level service capabilities

- Weigh technological innovation against commercialization capability

- Track opportunities created by the evolution of evaluation criteria

[1] Sina Finance - “MiniMax Announces Open-Sourcing of a New Evaluation Benchmark for Coding Agents” (https://finance.sina.com.cn/7x24/2026-01-14/doc-inhhfvzc7374594.shtml)

[2] OSCHINA - “MiniMax M2.1: Multilingual Programming SOTA, Built for Complex Real-World Tasks” (https://www.oschina.net/news/391562)

[3] 36Kr - “Former ByteDance Tech Lead Launches Startup to Build Enterprise-Level Coding Agent Platform” (https://m.36kr.com/p/3616436553696514)

[4] 36Kr - “Epoch AI Year-End Report: GPT-5 Controversy, Open-Source Catch-Up, and Capability Leaps Reveal Accelerated AI Development” (https://eu.36kr.com/zh/p/3610391154230534)

[5] Sina Finance - “2025 Review: From Models to Hardware, from Burning Money to Generating Revenue, AI Applications Stage a ‘Battle for Entrances’” (https://finance.sina.com.cn/roll/2026-01-03/doc-inheytmy8566242.shtml)

Insights are generated using AI models and historical data for informational purposes only. They do not constitute investment advice or recommendations. Past performance is not indicative of future results.
