Goldman Sachs Deploys Autonomous AI Agents for Accounting and Compliance Functions

About us: Ginlix AI is the AI Investment Copilot powered by real data, bridging advanced AI with professional financial databases to provide verifiable, truth-based answers. Please use the chat box below to ask any financial question.

Related Stocks

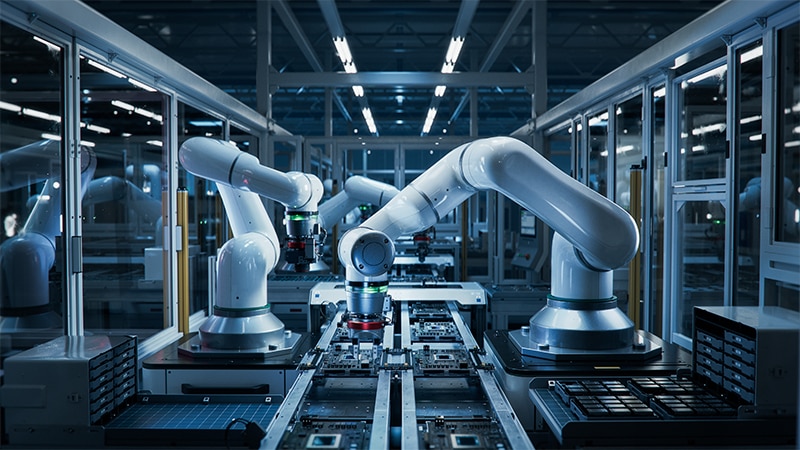

On February 6, 2026, Goldman Sachs unveiled its deployment of autonomous AI agents powered by Anthropic’s Claude model, representing one of the most significant integrations of generative AI into core financial services operations by a major Wall Street institution [1]. The partnership model goes beyond traditional vendor relationships, featuring a six-month embedding of Anthropic engineers within Goldman’s technology teams to co-develop AI agents specifically designed for complex, rule-based financial tasks [3]. According to Goldman Sachs Chief Information Officer Marco Argenti, the goal is to “speed up tasks that involve massive amounts of data without investing in more manpower,” positioning AI not merely as a cost-cutting mechanism but as a capability multiplier that enables the firm to scale operations efficiently [3].

The targeted applications span transaction reconciliation, trade accounting, and client vetting and onboarding, functions historically resistant to automation because of their regulatory complexity and the need for nuanced judgment [1][2]. The deployment coincides with Anthropic’s release of Claude Opus 4.6, which reportedly maintains low rates of misaligned behavior while achieving the lowest rate of over-refusals among recent Claude models [5]. The model’s enhanced capabilities, including a 1-million-token context window and improved coordination among agents, are aimed specifically at enterprise-scale deployments in regulated industries [5].

The Goldman Sachs deployment exemplifies a broader industry movement toward “agentic AI”: autonomous systems that make independent decisions within defined parameters, a fundamental evolution from traditional robotic process automation (RPA) [6]. Earlier automation approaches follow rigid, pre-programmed rules; AI agents, by contrast, can manage exceptions, interpret unstructured data, and adapt to evolving regulatory requirements. Industry analysis indicates that AI agents in financial services are achieving accuracy rates above 90% in document processing, data extraction, and compliance validation, substantially higher than both manual work and earlier rule-based automation [7]. For fraud detection specifically, organizations deploying AI agents report a 77% return on investment, as the systems identify patterns humans may miss while reducing false positives [7].
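
To make the contrast concrete, here is a minimal Python sketch of the kind of rigid, rule-based transaction reconciliation described above. The `Entry` record, the `reconcile` function, and the match-on-reference rule are hypothetical illustrations, not Goldman Sachs’s or any vendor’s implementation. The point is only that fixed rules resolve clean matches and push everything else into an exception queue, which is the residue agentic systems are said to handle.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Entry:
    """One transaction record (hypothetical schema for illustration)."""
    ref: str         # reference assumed to be shared by both systems and unique
    amount: Decimal  # Decimal avoids binary floating-point rounding on money


def reconcile(ledger, statement, tolerance=Decimal("0.00")):
    """Rule-based matching: pair records by reference, then compare amounts.

    Returns (matched_refs, exceptions); each exception is (reason, entry).
    """
    unmatched = {e.ref: e for e in statement}  # statement lines not yet claimed
    matched, exceptions = [], []
    for entry in ledger:
        other = unmatched.pop(entry.ref, None)
        if other is None:
            exceptions.append(("missing_on_statement", entry))
        elif abs(entry.amount - other.amount) > tolerance:
            exceptions.append(("amount_mismatch", entry))
        else:
            matched.append(entry.ref)
    # Statement lines that no ledger entry claimed are exceptions as well.
    exceptions.extend(("missing_in_ledger", e) for e in unmatched.values())
    return matched, exceptions


ledger = [Entry("T1", Decimal("100.00")), Entry("T2", Decimal("250.00")),
          Entry("T3", Decimal("75.50"))]
statement = [Entry("T1", Decimal("100.00")), Entry("T2", Decimal("249.99")),
             Entry("T4", Decimal("10.00"))]
matched, exceptions = reconcile(ledger, statement)
print(matched)                               # ['T1']
print([reason for reason, _ in exceptions])
# ['amount_mismatch', 'missing_on_statement', 'missing_in_ledger']
```

A one-cent difference on `T2` is enough to break the exact-match rule. Deciding whether such a gap is a fee, a rounding convention, or a genuine error is the kind of judgment call the article attributes to AI agents rather than to fixed rules.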

The strategic rationale underlying Goldman Sachs’s approach reflects a calculus shared across Wall Street. As financial institutions face increasing pressure to improve operational efficiency while managing regulatory compliance costs, AI agents offer a way to handle high-volume, data-intensive processes without proportional headcount growth [3]. JPMorgan Chase has reportedly made significant accounting changes, reclassifying technology investments, while Citi expects banks to increasingly hire for new roles, including AI managers and compliance officers who specialize in automated systems [2]. Deutsche Bank is also integrating AI heavily across its operations, a sign of the competitive dynamic that is accelerating AI adoption across the industry.

Deploying AI agents for compliance functions creates inherent tensions between regulatory demands and operational efficiency. Financial services operate under rigorous regulatory scrutiny that requires detailed audit trails, decision transparency, and accountability, the same requirements that generate much of the operational complexity AI agents are meant to reduce. Industry observers note that successful implementations require several critical governance components: embedded compliance frameworks that place regulatory guardrails on every agent action; complete audit trails for all automated decisions; transparency controls that expose agent reasoning and data sources; and human override capabilities, with escalation paths for situations that require human judgment [7].
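
The four governance components listed above can be sketched as a thin wrapper around an agent’s proposed actions. This is a minimal Python illustration under stated assumptions, not a description of Goldman Sachs’s or Anthropic’s tooling: the `Decision` and `GovernedRunner` names, the blocked-action set standing in for compliance guardrails, and the fixed 0.9 confidence threshold for escalation are all hypothetical, and the agent is assumed to report a confidence score alongside its reasoning and data sources.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    """An action proposed by an agent, with the context a reviewer needs."""
    action: str
    confidence: float   # assumed self-reported score in [0, 1]
    reasoning: str      # transparency: why the agent proposed this action
    sources: list       # transparency: data the agent relied on


@dataclass
class GovernedRunner:
    """Hypothetical wrapper applying guardrails, audit trail, and escalation."""
    blocked_actions: set                 # guardrail: never auto-execute these
    min_confidence: float = 0.9          # assumed escalation threshold
    audit_log: list = field(default_factory=list)

    def _record(self, **fields):
        # Audit trail: append-only, every entry timestamped in UTC.
        fields["timestamp"] = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(fields)

    def submit(self, decision: Decision) -> str:
        if decision.action in self.blocked_actions:
            outcome = "blocked"
        elif decision.confidence < self.min_confidence:
            outcome = "escalated_to_human"   # escalation path
        else:
            outcome = "auto_approved"
        self._record(outcome=outcome, **asdict(decision))
        return outcome

    def human_override(self, entry_index: int, reviewer: str, outcome: str) -> None:
        """Log a reviewer's ruling as a new entry instead of rewriting history."""
        if not 0 <= entry_index < len(self.audit_log):
            raise IndexError("no audit entry at that index")
        self._record(overrides=entry_index, reviewer=reviewer, outcome=outcome)


runner = GovernedRunner(blocked_actions={"waive_kyc_check"})
print(runner.submit(Decision("match_trade", 0.97, "IDs and amounts agree",
                             ["ledger:T1", "custodian:T1"])))   # auto_approved
print(runner.submit(Decision("match_trade", 0.62, "amounts differ by 0.01",
                             ["ledger:T2"])))                    # escalated_to_human
runner.human_override(1, reviewer="ops_analyst", outcome="approved_with_note")
print(len(runner.audit_log))                                     # 3
```

Overrides are appended rather than edited in place, so the log keeps both the agent’s original call and the human ruling, which is what an auditor would need to reconstruct a decision.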

These governance requirements create both challenges and opportunities for financial institutions. The complexity of deploying autonomous agents in regulated environments generates demand for specialized integration, governance, and compliance consulting services. At the same time, institutions that successfully develop robust AI governance frameworks may gain competitive advantages through demonstrated regulatory compliance and operational reliability. That Goldman Sachs’s announcement coincided with the release of Claude Opus 4.6 suggests coordinated positioning to demonstrate enterprise-grade capabilities; reports indicate the model update sparked significant market movements among software firms and their credit providers as investors reassessed winners and losers in the AI trade [2].

For Anthropic, the Goldman Sachs deployment represents significant validation of its enterprise AI strategy and comes at a time when the company is reportedly planning a multibillion-dollar fundraising that would value the Claude chatbot maker at approximately $350 billion [5]. The partnership provides high-profile evidence that Claude can meet the demanding requirements of global financial institutions, potentially accelerating adoption among competing financial services firms. The emergence of autonomous agents capable of replacing or significantly augmenting human decision-making in complex workflows represents what some analysts have termed a “SaaSpocalypse” — a fundamental disruption to traditional software-as-a-service business models [5]. Companies whose value propositions center on human-mediated workflows may face pressure to integrate agentic AI capabilities or risk competitive displacement.

The Goldman-Anthropic relationship exemplifies deepening partnerships between financial institutions and AI developers, potentially accelerating consolidation among AI vendors that can demonstrate enterprise-grade reliability and regulatory compliance. Financial services are a high-value, high-complexity vertical where AI deployment carries significant dollar impact, so successful deployments in this sector are strong indicators of broader enterprise viability. The choice of Anthropic’s Claude over competing offerings from OpenAI or Google reflects strategic positioning in the AI model landscape, potentially reducing dependence on a single dominant provider while drawing on Anthropic’s emphasis on AI alignment and safety [1].

Goldman Sachs’s deployment of AI agents for accounting and compliance functions illuminates a fundamental shift in how financial institutions approach operational scalability. By targeting functions that involve processing massive amounts of data, the firm seeks to accelerate task completion without corresponding increases in manpower [3]. Many financial institutions are likely to weigh the same calculus, as rising transaction volumes, evolving regulatory requirements, and margin pressure together create compelling efficiency drivers. The explicit targeting of accounting and compliance, functions historically resistant to automation because of regulatory complexity, signals confidence in Claude’s capabilities for high-stakes, rule-based decision-making [1][2].

The implications extend beyond immediate operational efficiency to workforce evolution. Rather than wholesale job displacement, financial services roles are likely to shift toward oversight, exception handling, and judgment-intensive functions, with AI agents handling routine processing and standard cases. This transition requires institutions to develop workforce strategies that address both technical talent needs and organizational change management. Citi’s expectation that banks will increasingly hire AI managers and compliance officers who specialize in automated systems reflects this evolving talent landscape [2].

The deployment of AI agents at scale creates cascading implications for technology and data infrastructure providers. Goldman Sachs Research’s projections indicate that power consumption from data centers will jump 175% by 2030 from 2023 levels, driven significantly by AI workloads [4]. This demand trajectory affects everything from chip manufacturers to utility providers and data center developers, creating investment themes across the technology supply chain. Effective AI agent deployment requires robust data infrastructure, governance, and accessibility — areas where institutions may have significant capability gaps that require targeted investment.
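
It is worth spelling out what a 175% increase means, since it is easily misread as a 1.75× multiple. Taking 2023 data center power consumption as the baseline, the projection implies the following. The annual growth rate on the second line is derived here rather than stated in the cited research, and it assumes steady compounding over the seven years.

```latex
\begin{aligned}
P_{2030} &= P_{2023}\,(1 + 1.75) = 2.75\,P_{2023} \\
\text{CAGR} &= \left(\frac{P_{2030}}{P_{2023}}\right)^{1/7} - 1 = 2.75^{1/7} - 1 \approx 0.155
\end{aligned}
```

In other words, the projection amounts to consumption nearly tripling, or roughly 15.5% growth per year if spread evenly.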

The complexity of deploying AI agents across existing financial systems also requires sophisticated integration capabilities and careful change management. Not all financial institutions have the technical sophistication or talent base to develop and deploy autonomous AI agents effectively, creating potential competitive advantages for early movers like Goldman Sachs. Smaller financial institutions may partner with AI vendors through consortiums or shared platforms to achieve economies of scale in AI deployment, potentially reshaping competitive dynamics within investment banking, wealth management, and consumer banking.

Goldman Sachs’s deep partnership with Anthropic distinguishes its approach from industry peers in several ways: the six-month embedding of Anthropic engineers represents an unusually close collaboration model; the choice of Claude over competing offerings reflects strategic positioning; and the explicit targeting of high-complexity functions signals confidence in AI capabilities for mission-critical applications [1][3]. These choices may build competitive moats for Goldman while leaving institutions that have not established similar relationships at a disadvantage.

As financial institutions intensify their evaluation of AI agent solutions, the Goldman endorsement, paired with a model release that reportedly sparked significant market movements among software firms and their credit providers [2], is likely to win Anthropic significant mindshare. However, the broader vendor landscape will see increased competitive pressure as both established players and emerging specialists vie for position in the agentic AI market.

The deployment of autonomous AI agents in regulated financial environments presents several categories of risk that warrant careful attention. Regulatory uncertainty is a significant consideration, as the evolving regulatory landscape for AI in financial services creates both compliance costs and potential barriers to entry. Securities regulators and banking supervisors are expected to increase their focus on AI governance frameworks, potentially issuing guidance or proposed rules for autonomous systems in financial services. Institutions must therefore establish governance frameworks that permit AI experimentation under appropriate controls and oversight, while preparing for potentially heightened regulatory scrutiny.

Model reliability and safety present inherent risks when deploying autonomous systems in high-stakes financial environments. Deploying such systems requires extremely high confidence in model reliability, safety, and alignment with organizational objectives. The governance requirements for autonomous AI agents operating in regulated environments include embedded compliance frameworks, complete audit trails, transparency controls, and human override capabilities — each representing both a technical challenge and a compliance obligation [7]. Integration complexity and existing system compatibility also create execution risks, as deploying AI agents across legacy financial systems requires sophisticated capabilities and careful change management.

Data infrastructure limitations may constrain effective AI agent deployment for institutions lacking robust data governance and accessibility frameworks. The quality and availability of training data, real-time data access for agent operations, and data security requirements all represent potential constraints on deployment scope and effectiveness. Workforce transition risks include potential talent shortages in AI governance, model risk management, and financial technology expertise, as well as organizational change management challenges associated with shifting human roles toward oversight functions.

The Goldman Sachs deployment creates several windows of opportunity for industry participants. Financial services executives should assess their readiness to deploy AI agents in accounting, compliance, and operations functions, and evaluate AI vendors’ capabilities with an emphasis on governance, auditability, and regulatory alignment [4]. Organizations that successfully develop robust AI governance frameworks may achieve competitive advantages through demonstrated regulatory compliance and operational reliability.

Technology vendors have opportunities to develop deep integration partnerships rather than transactional relationships, building governance and oversight capabilities into AI agent platforms from inception. The complexity of deploying autonomous agents in regulated environments creates demand for specialized integration, consulting, and compliance services, representing growth opportunities for professional services firms and technology providers alike. The emergence of agentic AI may accelerate consolidation among AI vendors while creating disruption for traditional software providers, with winners likely to include AI infrastructure providers, successful AI deployers, and governance/oversight technology vendors.

For investors, the Goldman Sachs announcement represents a significant data point in assessing AI adoption trajectories across industries. The shift toward autonomous agents may create opportunities in AI infrastructure, successful AI deployers, and governance technology vendors, though regulatory and execution risks remain significant considerations. Industry standards development will likely create both compliance requirements and competitive differentiation opportunities, with institutions and vendors that proactively engage with standards bodies potentially achieving positioning advantages.

The deployment of autonomous AI agents by Goldman Sachs using Anthropic’s Claude model represents a pivotal development in the financial services industry’s adoption of generative AI technology. This initiative moves beyond experimental use cases into mission-critical back-office operations, targeting accounting, compliance, and operational finance functions with autonomous systems capable of complex decision-making within defined parameters. The six-month partnership model featuring embedded Anthropic engineers demonstrates a collaborative approach to AI development that may become increasingly common as institutions seek to customize solutions for regulated environments.

Industry analysis indicates AI agents in financial services are achieving 90%+ accuracy rates in document processing and compliance validation, with 77% return on investment reported for fraud detection applications [7]. These performance metrics suggest meaningful efficiency gains are achievable, though governance requirements including audit trails, transparency controls, and human override capabilities are essential for regulatory compliance [7]. The competitive dynamics are likely to accelerate AI adoption across the industry, with smaller institutions potentially partnering through consortiums to achieve economies of scale.
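
Under the conventional definition of return on investment, net gain divided by cost, the 77% figure can be unpacked as below, with G the gross benefit and C the cost. The cited analysis does not specify the cost base or time horizon, so the one-million-dollar cost on the second line is a purely hypothetical illustration.

```latex
\begin{aligned}
\text{ROI} &= \frac{G - C}{C} = 0.77 \;\Longrightarrow\; G = 1.77\,C \\
C = \$1.0\text{M} &\;\Longrightarrow\; G = \$1.77\text{M}, \quad G - C = \$0.77\text{M}
\end{aligned}
```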

Goldman Sachs Research projects that data center power consumption will increase 175% by 2030 from 2023 levels, driven significantly by AI workloads, indicating substantial infrastructure investment requirements [4]. The collaboration positions Anthropic for potentially significant market gains, with the company reportedly planning a funding round that could value it at approximately $350 billion [5]. Regulatory frameworks will likely evolve to address AI-mediated financial operations, creating both compliance obligations and competitive differentiation opportunities for institutions that proactively develop robust governance capabilities.

Insights are generated using AI models and historical data for informational purposes only. They do not constitute investment advice or recommendations. Past performance is not indicative of future results.
